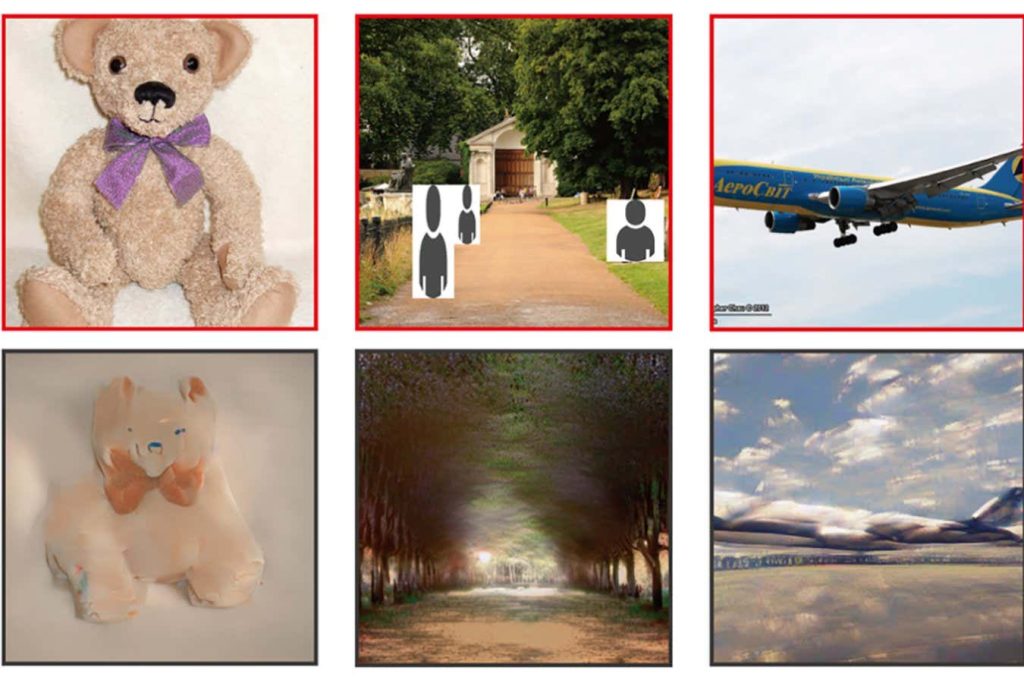

The images in the bottom row were recreated from the brain scans of someone looking at those in the top row

Yu Takagi and Shinji Nishimoto/Osaka University, Japan

A tweak to a popular text-to-image-generating artificial intelligence allows it to turn brain signals directly into pictures. The system requires extensive training using bulky and costly imaging equipment, however, so everyday mind reading is a long way from reality.

Several research groups have previously generated images from brain signals using energy-intensive AI models that require fine-tuning of millions to billions of parameters.

Now, Shinji Nishimoto and Yu Takagi at Osaka University in Japan have developed a much simpler approach using Stable Diffusion, a text-to-image generator released by Stability AI in August 2022. Their new method involves thousands, rather than millions, of parameters.


When used normally, Stable Diffusion turns a text prompt into an image by starting with random visual noise and gradually refining it until it resembles images in its training data that had similar text captions.

Nishimoto and Takagi built two add-on models to make the AI work with brain signals. The pair used data from four people who took part in a previous study that used functional magnetic resonance imaging (fMRI) to scan their brains while they were viewing 10,000 distinct pictures of landscapes, objects and people.

Using around 90 per cent of the brain-imaging data, the pair trained a model to make links between fMRI data from a brain region that processes visual signals, called the early visual cortex, and the images that people were viewing.

They used the same dataset to train a second model to form links between text descriptions of the images – made by five annotators in the previous study – and fMRI data from a brain region that processes the meaning of images, called the ventral visual cortex.
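The article doesn't say what kind of model links the brain data to Stable Diffusion's inputs. Purely as an illustration, the sketch below assumes a regularised linear (ridge) regression that maps a vector of fMRI voxel responses to a target vector, such as a representation of an image or of its text caption. The data are random stand-ins, and the function names `fit_ridge` and `predict` are hypothetical, not taken from the study.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Hypothetical helper: fit W minimising ||XW - Y||^2 + alpha * ||W||^2.

    X: (n_scans, n_voxels) brain responses; Y: (n_scans, n_targets) targets.
    Assumes roughly zero-mean data, so no intercept term is fitted.
    """
    n_voxels = X.shape[1]
    # Solve (X^T X + alpha * I) W = X^T Y instead of forming an explicit inverse
    A = X.T @ X + alpha * np.eye(n_voxels)
    return np.linalg.solve(A, X.T @ Y)


def predict(X, W):
    """Map new brain responses to predicted target vectors."""
    return X @ W


# Toy stand-in data (assumption): 200 "scans" of 50 voxels, 8-dimensional targets
rng = np.random.default_rng(0)
X_all = rng.standard_normal((200, 50))
true_W = rng.standard_normal((50, 8))
Y_all = X_all @ true_W + 0.1 * rng.standard_normal((200, 8))

# About 90 per cent of scans for training, the rest held out
split = int(0.9 * len(X_all))
W = fit_ridge(X_all[:split], Y_all[:split], alpha=1.0)
Y_pred = predict(X_all[split:], W)
```

In this toy setup the held-out predictions track the true targets closely; real fMRI data are far noisier, and a separate mapping would have to be fitted for each person and each brain region.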

After training, these two models, which had to be customised to each individual, could translate brain-imaging data into inputs that were fed directly into Stable Diffusion. The combined system could then reconstruct around 1000 of the images people viewed with about 80 per cent accuracy, without having been trained on the original images. This level of accuracy is similar to that achieved in an earlier study that analysed the same data using a much more laborious approach.

“I couldn’t believe my eyes, I went to the toilet and took a look in the mirror, then returned to my desk to take a look again,” says Takagi.

However, the study only tested the approach on four people and mind-reading AIs work better on some people than others, says Nishimoto.

What’s more, as the models must be customised to the brain of each individual, this approach requires lengthy brain-scanning sessions and huge fMRI machines, says Sikun Lin at the University of California, Santa Barbara. “This is not practical for daily use at all,” she says.

In future, more practical versions of the approach could allow people to make art or alter images with their imagination, or add new elements to gameplay, says Lin.
